Title: Robust Network Intrusion Detection Through Explainable Artificial Intelligence (XAI)

Authors: Pieter Barnard, Nicola Marchetti, Luiz A. DaSilva

What’s this paper all about?

In the past few years, Artificial Intelligence (AI) has become the go-to approach for devising solutions that can optimally operate and manage our networks. However, despite the significant performance gains that AI tends to bring, its adoption in critical areas of networking, such as cybersecurity, has been severely hampered by the inherent lack of interpretability from which many of these solutions suffer: operators and analysts cannot determine why or how the AI algorithm arrives at its final decisions. Recently, a suite of techniques known as eXplainable AI (XAI) has been developed, which allows analysts to scrutinise and reverse engineer parts of a ‘black-box’ algorithm in order to gain insights into its internal decision-making processes.

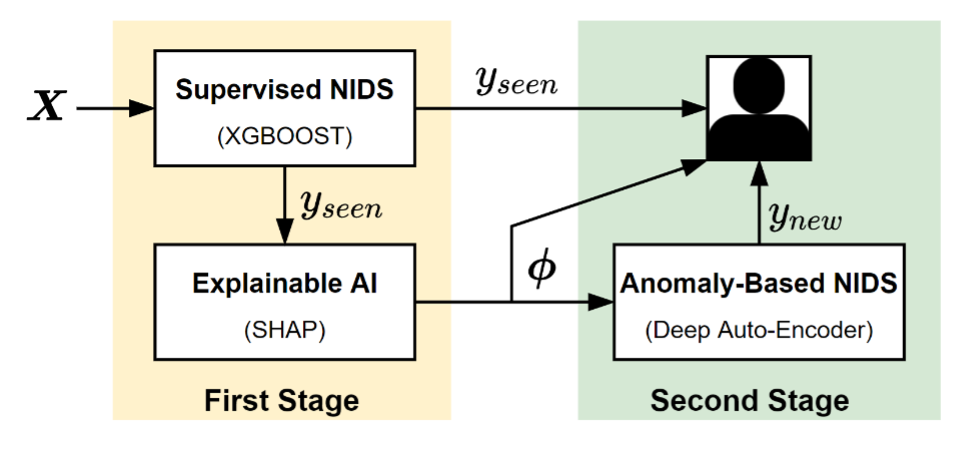

In this work, we investigate the benefits of applying explainable AI tools to an algorithm for network intrusion detection. Unlike previous works in this space, which have focused solely on the benefits that XAI brings to the human analyst, our work is among the first to explore the benefits of employing AI tools to analyse the explanations of the intrusion detection algorithm.

What exactly have you discovered?

Our findings reveal that AI techniques, such as those based on unsupervised learning, are highly effective at extracting important features from the explanations of our intrusion detection system. These features, in turn, can be used to significantly enhance the ability of the intrusion algorithm to detect “zero-day” attacks (i.e., attacks that are unknown during the training stage), as well as to improve the overall performance of the intrusion system.

For example, an analysis of the popular NSL-KDD (Network Security Laboratory – Knowledge Discovery in Databases) intrusion dataset shows that our final solution (i.e., after explanations from the initial intrusion detection algorithm have been analysed using unsupervised AI, and the extracted information used to enhance the decisions of the overall system) achieves an overall accuracy of 93%, compared to an accuracy of only 79% for the original intrusion algorithm (i.e., without any XAI). Moreover, our final solution outperforms numerous state-of-the-art works in terms of metrics such as accuracy, recall and precision.
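The general idea of analysing explanations with an unsupervised method can be illustrated with a minimal sketch. It is not the implementation from the paper: the linear scoring model, the additive per-feature attribution, the distance-to-centroid anomaly rule, the 0.99 quantile and all names and toy data below are assumptions chosen only to keep the example small and dependency-free. Each flow is turned into an explanation vector, a profile of "normal" explanations is learned without any attack labels, and a flow is flagged when its explanation lies unusually far from that profile.

```python
import math
import random


def explain(weights, baseline, x):
    """Additive attribution for a linear score: contribution of each feature
    relative to a baseline input (a stand-in for an XAI explanation)."""
    return [w * (xi - b) for w, xi, b in zip(weights, x, baseline)]


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def distance(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def fit_explanation_profile(weights, baseline, flows, quantile=0.99):
    """Unsupervised step: summarise the explanations of known traffic by their
    centroid and a distance threshold taken at the given quantile."""
    explanations = [explain(weights, baseline, x) for x in flows]
    centre = centroid(explanations)
    dists = sorted(distance(e, centre) for e in explanations)
    threshold = dists[min(len(dists) - 1, int(quantile * len(dists)))]
    return centre, threshold


def is_suspicious(weights, baseline, profile, x):
    """Flag a flow whose explanation is farther from the profile centre than
    the learned threshold (a candidate previously unseen attack)."""
    centre, threshold = profile
    return distance(explain(weights, baseline, x), centre) > threshold


# Hypothetical toy data: 500 flows with 3 numeric features each.
random.seed(0)
known_flows = [[random.gauss(0.0, 1.0) for _ in range(3)] for _ in range(500)]

# Hypothetical weights of an already-trained linear detector.
weights = [0.8, -0.5, 1.2]
baseline = centroid(known_flows)

profile = fit_explanation_profile(weights, baseline, known_flows)

typical_flow = list(baseline)      # looks like average known traffic
unusual_flow = [8.0, -8.0, 8.0]    # far outside anything seen so far

print("typical flow flagged:", is_suspicious(weights, baseline, profile, typical_flow))
print("unusual flow flagged:", is_suspicious(weights, baseline, profile, unusual_flow))
```

In a realistic setting the linear model would be replaced by the actual intrusion detector, the attribution by a proper XAI method, and the centroid rule by a stronger unsupervised learner; the sketch only shows how the three stages connect.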

So what?

Cybersecurity, and in particular network intrusion detection, continues to play an important role in the reliable operation and privacy of our networks. One of the biggest challenges in this area is developing intrusion detection systems that achieve high detection accuracy at deployment time and also adapt automatically to the emergence of zero-day attacks over time. In addition to addressing the interpretability barrier typically associated with AI-based intrusion systems, our work offers fresh insights into the potential of XAI, coupled with unsupervised AI tools, to detect zero-day attacks in an automated fashion while significantly increasing the overall performance of the detection system.

Link to full paper: https://www.techrxiv.org/articles/preprint/Robust_Network_Intrusion_Detection_through_Explainable_Artificial_Intelligence_XAI_/20027594

CONNECT is the world-leading Science Foundation Ireland Research Centre for Future Networks and Communications. CONNECT is funded under the Science Foundation Ireland Research Centres Programme and is co-funded under the European Regional Development Fund. We engage with over 35 companies, including large multinationals, SMEs and start-ups. CONNECT brings together world-class expertise from ten Irish academic institutes to create a one-stop shop for telecommunications research, development and innovation.
